Material Classification

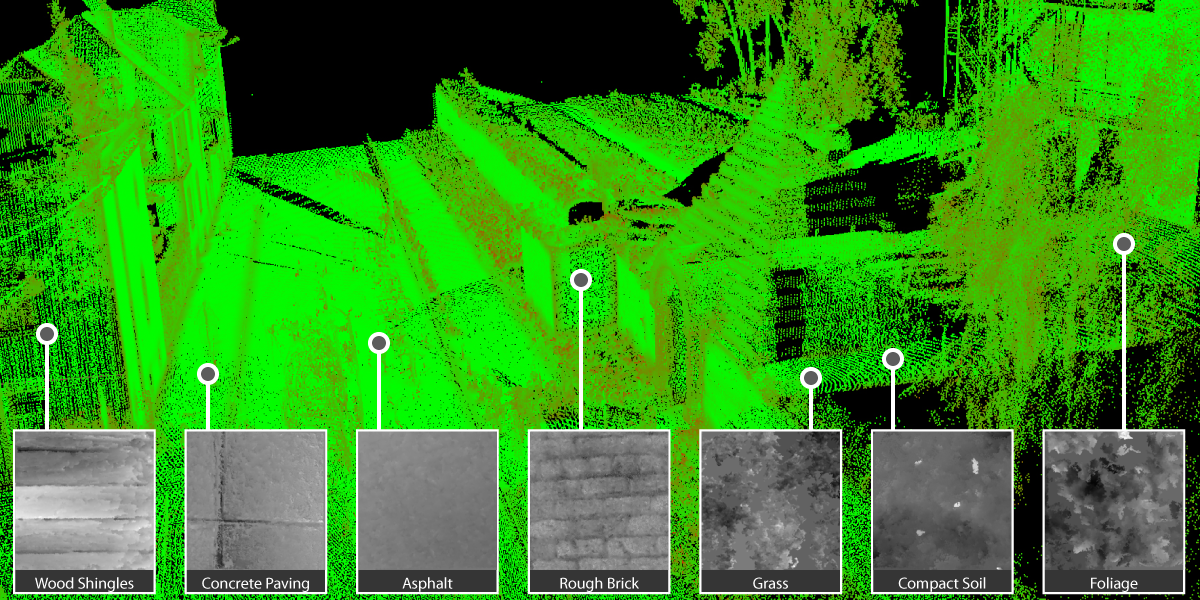

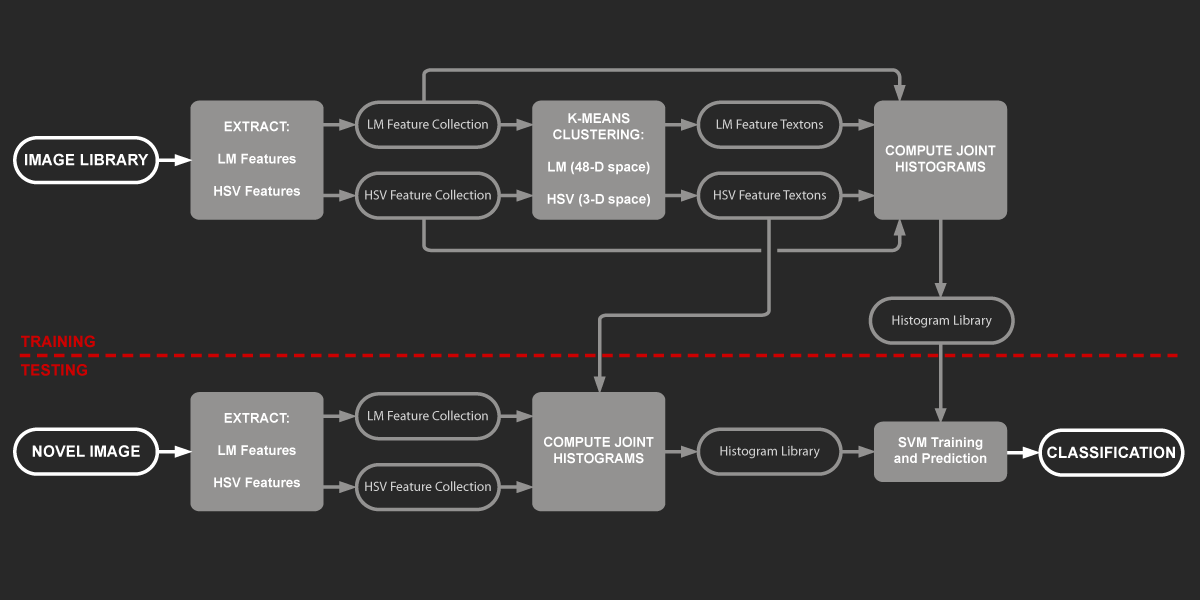

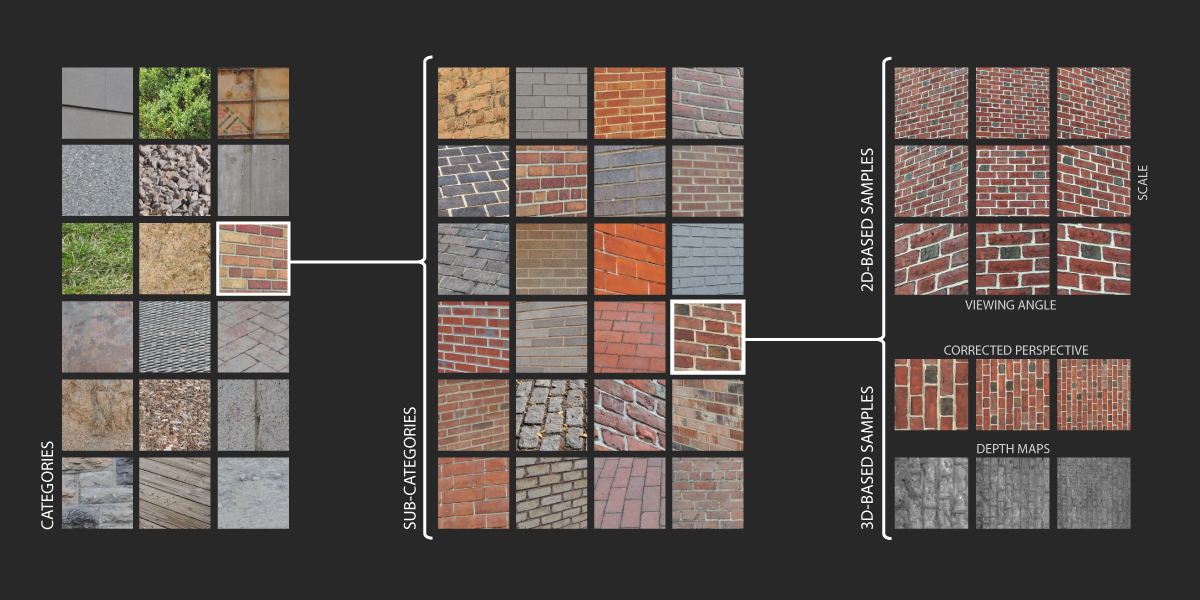

Going beyond point cloud data to automatically monitor construction progress or generate Building Information Models from site image collections requires recognizing specific semantic information about building elements, such as their construction materials and interconnectivity. In the case of materials, such information can only be derived from the appearance-based data contained in 2D imagery. State-of-the-art texture recognition algorithms, which are often used for recognizing materials, are very promising (reaching over 95% average accuracy), yet they have mainly been tested under strictly controlled conditions and often do not perform well on images collected from construction sites (dropping to 70% accuracy and lower). In addition, no benchmark exists that validates their performance under real-world construction site conditions. To address these limitations, we propose a new robust vision-based method for detecting and classifying materials from single images taken under unknown viewpoints and site illumination conditions. In the proposed algorithm, material appearance is modeled by a joint probability distribution of filter bank responses and principal Hue-Saturation-Value (HSV) color values. This distribution is derived by concatenating frequency histograms of filter-response clusters and color clusters. These material histograms are classified using multiple one-vs.-all chi-square kernel Support Vector Machine (SVM) classifiers. Classification performance is compared with state-of-the-art algorithms from both the computer vision and AEC communities. For the experimental studies, a new database containing 20 typical construction materials with more than 150 images per category was assembled and used to validate the proposed method. Overall, average material classification accuracies of 97.1% for 200x200-pixel and 90.8% for 30x30-pixel image patches are reported.
When image patches are smaller than the baseline size, which can happen when an image is captured too far from a construction element, our method can synthetically generate additional pixels and maintain accuracy competitive with the results reported above. The results show the promise of the proposed method's applicability and expose the limitations of state-of-the-art classification algorithms under real-world conditions. This work also defines a new benchmark that can be used to measure the performance of future algorithms.
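The abstract describes the material descriptor only at a high level, so the following is a minimal sketch of one plausible reading, not the authors' code. It assumes that per-pixel filter bank responses and HSV values are already computed and that texton and color-cluster centers were learned beforehand (for example with k-means); every function name is hypothetical. The exponential form exp(-γ·χ²) is a common chi-square kernel for histogram SVMs, but the exact kernel form and γ used in the paper are not stated here.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def nearest_center(vec: Vector, centers: Sequence[Vector]) -> int:
    """Index of the closest center by squared Euclidean distance.

    Note: treating hue as a plain number ignores its circular wrap-around,
    a simplification made only for this sketch.
    """
    return min(
        range(len(centers)),
        key=lambda k: sum((v - c) ** 2 for v, c in zip(vec, centers[k])),
    )


def frequency_histogram(labels: Sequence[int], n_bins: int) -> List[float]:
    """Normalized frequency histogram of cluster IDs (sums to 1 if non-empty)."""
    counts = [0.0] * n_bins
    for label in labels:
        counts[label] += 1.0
    total = sum(counts)
    return [c / total for c in counts] if total else counts


def material_descriptor(
    filter_responses: Sequence[Vector],
    hsv_pixels: Sequence[Vector],
    textons: Sequence[Vector],
    color_centers: Sequence[Vector],
) -> List[float]:
    """Concatenate the texton (filter-response) histogram and the HSV color-cluster histogram."""
    texton_ids = [nearest_center(r, textons) for r in filter_responses]
    color_ids = [nearest_center(p, color_centers) for p in hsv_pixels]
    return frequency_histogram(texton_ids, len(textons)) + frequency_histogram(
        color_ids, len(color_centers)
    )


def chi_square_distance(x: Vector, y: Vector, eps: float = 1e-12) -> float:
    """Symmetric chi-square distance: 0.5 * sum((x_i - y_i)^2 / (x_i + y_i))."""
    return 0.5 * sum((a - b) ** 2 / (a + b + eps) for a, b in zip(x, y))


def chi_square_kernel(x: Vector, y: Vector, gamma: float = 1.0) -> float:
    """Exponential chi-square kernel exp(-gamma * chi2(x, y)); equals 1 when x == y."""
    return math.exp(-gamma * chi_square_distance(x, y))


def gram_matrix(
    rows: Sequence[Vector], cols: Sequence[Vector], gamma: float = 1.0
) -> List[List[float]]:
    """Kernel matrix K[i][j] = k(rows[i], cols[j]) for an SVM with a precomputed kernel."""
    return [[chi_square_kernel(r, c, gamma) for c in cols] for r in rows]
```

For the one-vs.-all stage, the Gram matrix of the training descriptors could be passed to an SVM implementation that accepts precomputed kernels (for example, scikit-learn's `SVC(kernel="precomputed")`), fitting one binary classifier per material and predicting the material whose classifier returns the highest decision value.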

Results

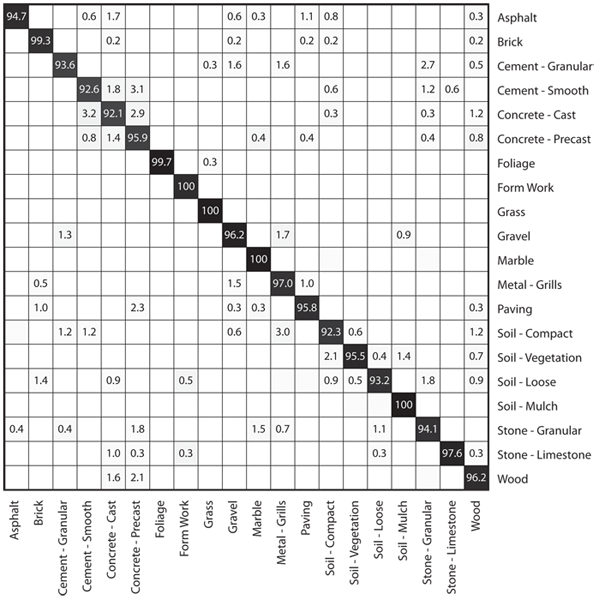

The figure above shows the confusion matrix for the performance of our proposed material detection and classification method on our Construction Materials Library (CML), which contains more than 3,000 images of 20 different construction materials. As the figure shows, most material categories are classified with very high average accuracy (above 90%). The figure also shows several examples of misclassified images. For several categories, such as cement and concrete, there is still 2-7% confusion between classes. For these categories, even a human observer looking only at the 200x200-pixel images in the library might not classify them correctly, primarily because context information is absent.
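As an illustration of how per-class accuracies and confusion rates like those discussed above can be read off a confusion matrix, here is a minimal sketch. It assumes rows are true classes and columns are predicted classes, and that "average accuracy" means the mean of the per-class accuracies; the function names and the 2-class counts are hypothetical and are not CML results.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[int]]


def confusion_rate(conf: Matrix, true_cls: int, pred_cls: int) -> float:
    """Fraction of images of true_cls that were predicted as pred_cls (row-normalized)."""
    row_total = sum(conf[true_cls])
    return conf[true_cls][pred_cls] / row_total if row_total else 0.0


def per_class_accuracy(conf: Matrix) -> List[float]:
    """Diagonal of the row-normalized confusion matrix."""
    return [confusion_rate(conf, i, i) for i in range(len(conf))]


def average_accuracy(conf: Matrix) -> float:
    """Mean of the per-class accuracies (each class weighted equally)."""
    accs = per_class_accuracy(conf)
    return sum(accs) / len(accs)


def most_confused(
    conf: Matrix, names: Sequence[str], k: int = 3
) -> List[Tuple[float, str, str]]:
    """Top-k off-diagonal (rate, true name, predicted name) triples, highest rate first."""
    n = len(conf)
    pairs = [
        (confusion_rate(conf, i, j), names[i], names[j])
        for i in range(n)
        for j in range(n)
        if i != j
    ]
    return sorted(pairs, reverse=True)[:k]


# Toy 2-class example with made-up counts (rows = true class, columns = predicted class).
toy = [[93, 7], [2, 98]]
print(per_class_accuracy(toy))                       # [0.93, 0.98]
print(most_confused(toy, ["cement", "concrete"], 1))  # [(0.07, 'cement', 'concrete')]
```

Weighting every class equally keeps a large category from dominating the summary figure, which matters when category sizes differ.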

Dataset

We have released the dataset described in our paper:

RAAMAC Construction Material Library (CML) 2013 - Dataset1

RAAMAC Construction Material Library (CML) 2013 - Dataset2

ReadMe.txt

Our dataset includes two packages, numbered Dataset1 and Dataset2. In our conference paper in the proceedings of CONVR 2012, we used only Dataset1. For our new journal paper, we added the Dataset2 folder and used both folders in our experiments. Each folder contains 20 categories of materials. Dataset1 contains 200x200-pixel color images stored as uncompressed BMP files. Dataset2 contains multiple versions of the Dataset1 images: different JPEG compression settings (CML_cXXX, where XXX is the JPEG compression setting), different subsampled image sizes (CML_XXX, where the images are XXX by XXX pixels in size), and different synthetically expanded versions (CML_XXX_RR or CML_XXX_quilt, where images of size XXX by XXX have been expanded using the RR or quilt algorithm).

The images in the CML are named set_a_b_c_d.bmp (or .jpeg in Dataset2), where a is the physical instance of the material; b is the scale in inches, so 24 means the image represents roughly a 24 by 24 inch patch; c is the crop instance within a single photograph; and d is the orientation instance within one physical instance. Each material also includes a set of unorganized images named uX.bmp, where X is the physical instance number.
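The naming scheme above lends itself to a small parser for indexing the library. This is a minimal sketch under stated assumptions: it treats the leading "set" field as an arbitrary prefix (the description does not say whether it is a literal word or a category name), assumes a, b, c and d are integers, and also accepts ".jpg"; the names `parse_image_name`, `parse_folder_name` and `CMLImage` are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional

# set_a_b_c_d.bmp / .jpeg; the non-greedy prefix tolerates underscores in "set".
_IMAGE_RE = re.compile(
    r"^(?P<set>.+?)_(?P<instance>\d+)_(?P<scale>\d+)_(?P<crop>\d+)_(?P<orientation>\d+)"
    r"\.(?:bmp|jpe?g)$",
    re.IGNORECASE,
)
# uX.bmp: unorganized images, X = physical instance number.
_UNORGANIZED_RE = re.compile(r"^u(?P<instance>\d+)\.(?:bmp|jpe?g)$", re.IGNORECASE)


@dataclass
class CMLImage:
    set_name: Optional[str]
    instance: int               # a: physical instance of the material
    scale_in: Optional[int]     # b: patch side length in inches (approximate)
    crop: Optional[int]         # c: crop instance within one photograph
    orientation: Optional[int]  # d: orientation instance within one physical instance
    unorganized: bool = False


def parse_image_name(filename: str) -> Optional[CMLImage]:
    """Return the fields encoded in a CML image file name, or None if it does not match."""
    m = _UNORGANIZED_RE.match(filename)
    if m:
        return CMLImage(None, int(m["instance"]), None, None, None, unorganized=True)
    m = _IMAGE_RE.match(filename)
    if m:
        return CMLImage(
            m["set"], int(m["instance"]), int(m["scale"]), int(m["crop"]), int(m["orientation"])
        )
    return None


def parse_folder_name(folder: str) -> Optional[dict]:
    """Decode a Dataset2 folder name (CML_cXXX, CML_XXX, CML_XXX_RR, CML_XXX_quilt)."""
    m = re.fullmatch(r"CML_c(\d+)", folder)
    if m:
        return {"variant": "jpeg", "compression": int(m[1])}
    m = re.fullmatch(r"CML_(\d+)", folder)
    if m:
        return {"variant": "subsampled", "size": int(m[1])}
    m = re.fullmatch(r"CML_(\d+)_(RR|quilt)", folder)
    if m:
        return {"variant": "expanded", "size": int(m[1]), "method": m[2]}
    return None
```

For example, `parse_image_name("set_3_24_1_2.bmp")` yields instance 3, a 24-inch scale, crop 1 and orientation 2, and `parse_folder_name("CML_30_quilt")` identifies 30x30 images expanded with the quilt algorithm.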

THIS DATA SET IS PROVIDED "AS IS" AND WITHOUT ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE.

When using our dataset, please cite the following publication.

Code

Our code will be released upon acceptance of the paper under review. Please stay tuned.

Publication

Dimitrov, A., and Golparvar-Fard, M.

Vision-based material recognition for automated monitoring of construction progress and generating building information modeling from unordered site image collections

Advanced Engineering Informatics, 28(1), 37–49, 2014.