Crowdsourcing construction workface assessment from jobsite videos

The advent of affordable jobsite cameras is reshaping the way onsite construction activities are monitored.

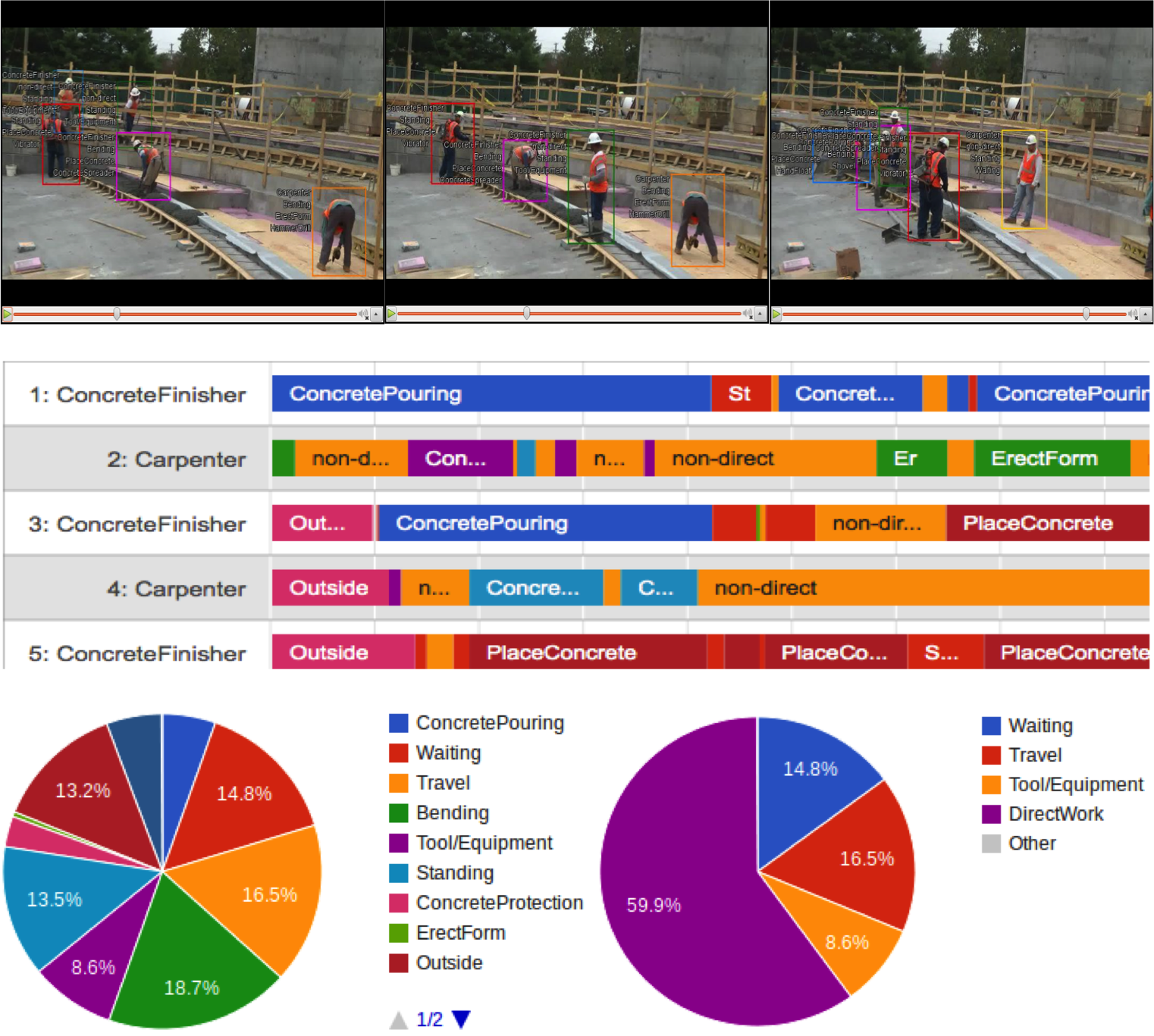

To facilitate the analysis of large collections of videos, research has focused on automating manual workface assessment by recognizing worker and equipment activities with computer vision algorithms. Despite the rapid growth of these methods, the ability to automatically recognize and understand worker and equipment activities from videos is still rather limited. Current algorithms require large-scale annotated workface assessment video data to learn models that can deal with the high degree of intra-class variability among activity categories. To address these limitations, we propose crowdsourcing the task of workface assessment from jobsite video streams. By introducing an intuitive web-based platform for massive marketplaces such as Amazon Mechanical Turk (AMT), together with several automated methods, we engage the intelligence of the crowd for interpreting jobsite videos. We aim to overcome the limitations of current workface assessment practices and to provide large empirical datasets, together with their ground truth, that can serve as the basis for developing video-based activity recognition methods. Through six extensive experiments, we show that engaging non-experts on AMT to annotate construction activities in jobsite videos can provide complete and detailed workface assessment results with 85% accuracy. We show that crowdsourcing has the potential to minimize the time needed for workface assessment, provides ground truth for algorithmic developments, and, most importantly, allows onsite professionals to focus their time on the more important tasks of root-cause analysis and performance improvement.
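As a rough illustration of how annotations from several non-expert workers might be combined and scored against ground truth, the sketch below uses simple majority voting per video frame. This is an assumption made for illustration only and is not necessarily the aggregation method used in the experiments; the function names, the frame-indexed data layout, and the activity labels ("working", "idle", "traveling") are all hypothetical.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most frequent label among one frame's crowd annotations.

    Ties are resolved in favor of the label that appears first in the list.
    """
    if not labels:
        raise ValueError("at least one label is required")
    return Counter(labels).most_common(1)[0][0]


def aggregate(annotations):
    """Collapse {frame_id: [label, ...]} into {frame_id: consensus_label}."""
    return {frame: majority_vote(labels) for frame, labels in annotations.items()}


def accuracy(predicted, ground_truth):
    """Fraction of ground-truth frames whose consensus label matches."""
    shared = [frame for frame in ground_truth if frame in predicted]
    if not shared:
        raise ValueError("no frames in common between prediction and ground truth")
    correct = sum(predicted[frame] == ground_truth[frame] for frame in shared)
    return correct / len(shared)


# Hypothetical example: three workers label three frames of one video.
crowd_labels = {
    0: ["idle", "working", "working"],
    1: ["idle", "idle", "traveling"],
    2: ["working", "idle", "idle"],
}
expert_labels = {0: "working", 1: "idle", 2: "working"}

consensus = aggregate(crowd_labels)
score = accuracy(consensus, expert_labels)
```

Here the consensus agrees with the hypothetical expert labels on two of the three frames. Majority voting is only one of several possible aggregation schemes; weighting workers by their past reliability is a common alternative.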

Publications:

Liu, K., and Golparvar-Fard, M. "Crowdsourcing Construction Activity Analysis from Jobsite Video Streams", ASCE Journal of Construction Engineering and Management (under review).